Course Description

This course is intended for students advancing in the study of robotic engineering. It focuses on how a robot can learn to perceive the physical world well enough to act in it and make reliable plans. The syllabus has been updated continually over the years. This semester, we will use the DeepClaw toolkit for basic training in machine vision and tactile learning, and adopt the RoboSuite toolkit and the teaching format developed by Dr. Zhu Yuke’s team at UT Austin for simulation and learning.

- Learn the basics of building robotic systems with vision-based machine learning and AI in the real world;

- Understand the technical challenges arising from building learning-based robotic manipulation systems;

- Get familiar with a variety of model-driven and data-driven principles and algorithms on robot learning;

- Be able to evaluate, communicate, and apply AI-based techniques to problem-solving in robotics.

Course Prerequisites

- Knowledge of basic data structures and algorithms, as well as practical skills in computer programming.

- Proficiency in Python is required, and high-level familiarity with C/C++ is a plus.

- Familiarity with calculus, statistics, and linear algebra. Strong mathematical skills are required.

- Coursework and/or equivalent experience in Robotics, Machine Learning, and AI are preferred.

- Be passionate, patient, and fearless when working with Robotics + AI systems.

Course Instructor & Teaching Support

- Lead Instructor: Dr. Song Chaoyang (songcy@sustech.edu.cn)

- Office Location: Room 517, North Block, Engineering Building

- Office Hours: 10:00~12:00 on Mondays

- Teaching Assistant 1: Wu Tianyu (12332365@mail.sustech.edu.cn)

- Teaching Assistant 2: Huang Bangchao (12332379@mail.sustech.edu.cn)

- Technical Support: Sun Haoran (11610409@mail.sustech.edu.cn)

- Student Assistant: Zhang Zishang (12012305@mail.sustech.edu.cn)

- Administrative Assistant: Fu Tian (fut@mail.sustech.edu.cn)

- For the lab session, we will use a set of tools developed by previous students of this class called DeepClaw. The teaching assistants are all previous course members, and some are the core developers of the latest version.

- The latest version won the 1st prize in the 2022 Capstone Project, and we have already pilot-tested it for three semesters in multiple courses offered by the SUSTechDL group.

- It is imperfect, and we hope to keep iterating the design to enhance your learning experience.

- Thank you very much for your understanding, patience, and cooperation.

Grading Policy

- Attendance: 10%

- Project #1 on DeepClaw Assignment: 20%

  - In-class Workshop 1/2 (10% each)

- Project #2 on Paper Review: 30%

  - 1st/2nd/3rd Paper Review (10% each)

- Course Project: 40%

  - Project Proposal Presentation (5%)

  - Project Milestone Presentations (5%)

  - Final Report and Codes (25%)

  - Spotlight Presentation Video (5%)

- Submission Deadlines

  - Mar 10 @ 23:30: 1st Paper Review PowerPoint

  - Mar 17 @ 23:30: DeepClaw Project Report 1

  - Mar 24 @ 23:30: Project Proposal PowerPoint

  - Apr 07 @ 23:30: 2nd Paper Review PowerPoint

  - Apr 07 @ 23:30: DeepClaw Project Report 2

  - Apr 14 @ 23:30: Project Milestone PDF

  - May 12 @ 23:30: 3rd Paper Review PowerPoint

  - Jun 02 @ 23:30: Final Report and Codes

  - Jun 06 @ 23:30: Spotlight Presentation Video

- Course Feedback Forms

  - Apr 11 @ 23:30: Mid-term Course Feedback

  - Jun 06 @ 23:30: End-of-term Course Feedback

Academic Integrity

- This course follows the SUSTech Code of Academic Integrity, and all students in this course must abide by it. Any work submitted by a student in this course for academic credit must be the student’s own work. Violations of the rules will not be tolerated.

University Calendar

Lecture & Lab Notes

Since this course counts toward the Machine Learning credit requirement (one of the four required courses on machine learning for Robotic Engineering), the lectures will cover extensive content on ML-related subjects. If you are keen to learn more, we recommend the following learning resources:

- CS329P: Practical Machine Learning: Book & Codes | Lecture Notes

- CS231n: Deep Learning for Computer Vision: Link

| Week # | Tuesday 1400~1550 Room 210, Teaching Hall #3 | Friday 1620~1810 Room 210, Teaching Hall #3 | Submission Deadlines |

|---|---|---|---|

| 01 | Feb 20: Lecture 01 on Course Introduction | PDF | Feb 23: Lecture 02 on Collaborative Robots | PDF | |

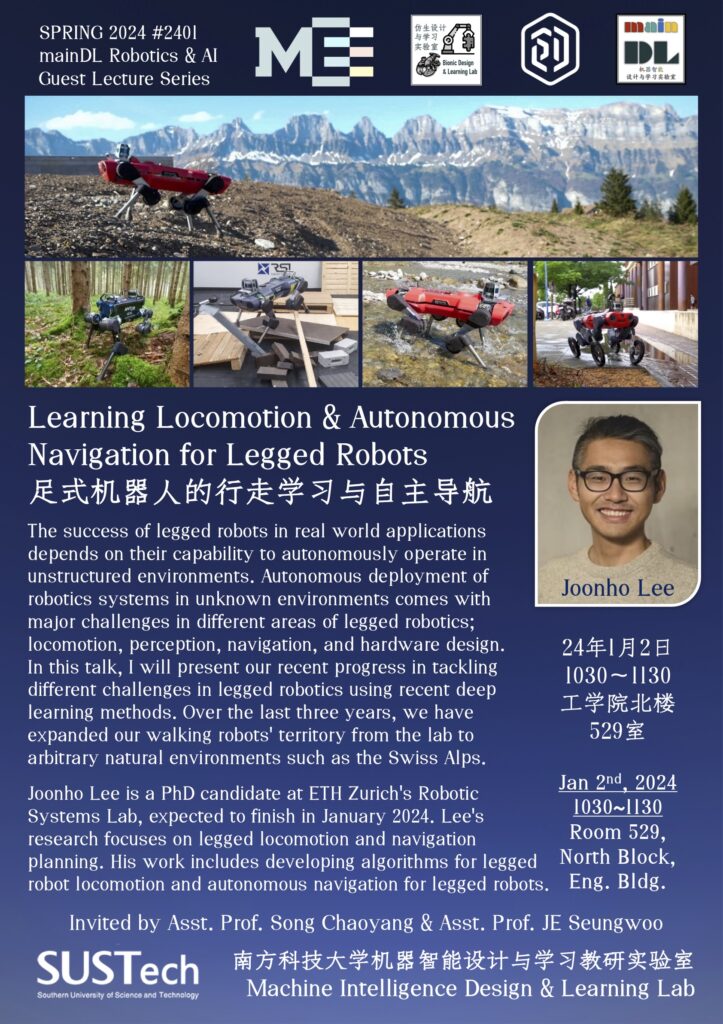

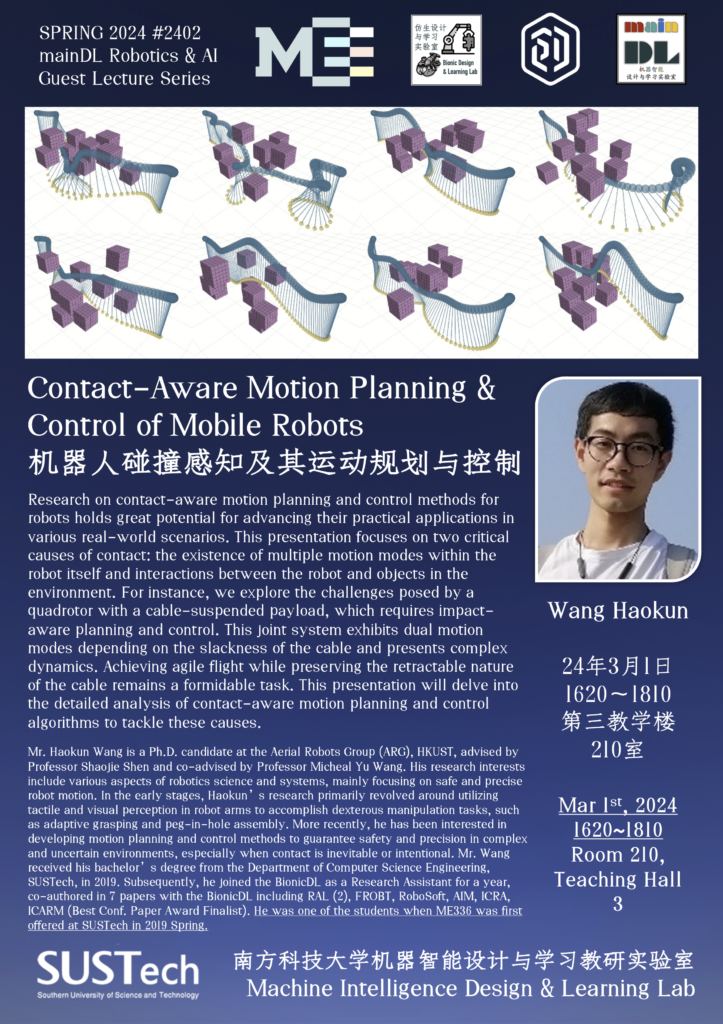

| 02 | Feb 27: Lecture 03 on Vision-based Perception | PDF | Mar 01: Guest Lecture – By Wang Haokun | |

| 03 | Mar 05: Lecture 04 on Machine Learning | PDF | Mar 08: Lecture 05 on Learning Classification | PDF | Mar 10 @ 23:30: – 1st Paper Review PowerPoint |

| 04 | Mar 12: DeepClaw Workshop A1 – By Wu Tianyu & Huang Bangchao | Mar 15: DeepClaw Workshop A2 – By Wu Tianyu & Huang Bangchao | Mar 17 @ 23:30: – DeepClaw Workshop Report 1 |

| 05 | Mar 19: 1st Paper Review – Team 2: Paper | PDF | PowerPoint – Team 6: Paper | PDF | PowerPoint – Team 7: Paper | PDF | PowerPoint | Mar 22: Lecture 06 on Neural Networks | PDF | Mar 24 @ 23:30: – Project Proposal PowerPoint |

| 06 | Mar 26: Project Proposal Presentation – Team 1: PowerPoint | PDF – Team 2: PowerPoint | PDF – Team 3: PowerPoint | PDF – Team 4: PowerPoint | PDF – Team 5: PowerPoint | PDF – Team 6: PowerPoint | PDF – Team 7: PowerPoint | PDF – Team 8: PowerPoint | PDF – Team 9: PowerPoint | PDF | Mar 29: Lecture 07 on Convolutional Networks | PDF | |

| 07 | Apr 02: DeepClaw Workshop B1 – By Wu Tianyu & Huang Bangchao | – By Wu Tianyu & Huang Bangchao | Apr 07 @ 23:30: – 2nd Paper Review PowerPoint – DeepClaw Workshop Report 2 |

| 08 | Apr 09: Lecture 08 on Regularization | PDF | Apr 12: 2nd Paper Review – Team 3: Paper | PDF | PowerPoint – Team 4: Paper | PDF | PowerPoint – Team 8: Paper | PDF | PowerPoint | Apr 14 @ 23:30: – Project Milestone PDF |

| 09 | Apr 16: Project Milestone Presentation – Team 1: PDF – Team 2: PDF – Team 3: PDF – Team 4: PDF – Team 5: PDF – Team 6: PDF – Team 7: PDF – Team 8: PDF – Team 9: PDF | Apr 19: Lecture 09 on Optimization| PDF | |

| 10 | Apr 23: Lecture 10 on Markovian Modeling | PDF | Apr 26: Lecture 11 on Markovian Learning | PDF | |

| 11 | Apr 30: Lecture 12 on Manipulation Learning | PDF | ||

| 12 | May 07: DeepClaw Workshop C1 – By Wu Tianyu & Huang Bangchao | May 10: Lecture 13 on ChatGPT for Robotics | PDF | May 12 @ 23:30: – 3rd Paper Review PowerPoint |

| 13 | May 14: 3rd Paper Review – Team 1: Paper | PDF | PowerPoint – Team 5: Paper | PDF | PowerPoint – Team 9: Paper | PDF | PowerPoint | May 17: Final Project Preparation | |

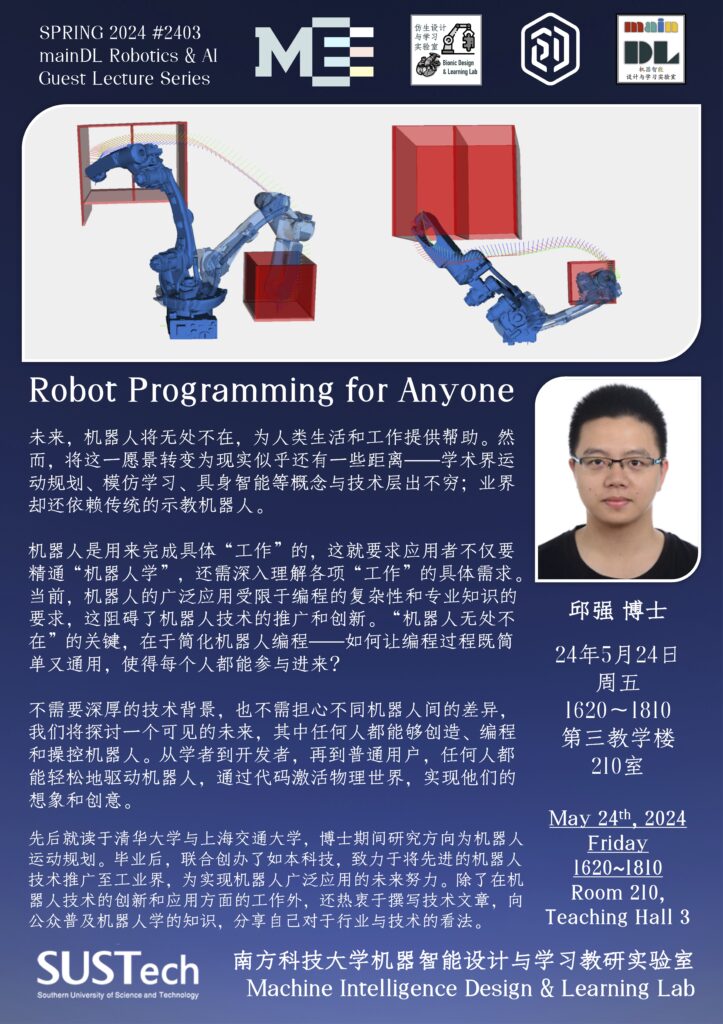

| 14 | May 21: Final Project Preparation | May 24: Guest Lecture – By Dr. Qiu Qiang | |

| 15 | May 28: Final Project Preparation | May 31: Final Project Preparation | Jun 02 @ 23:30: – Final Report and Codes |

| 16 | Jun 04: Feedback on Final Report | Jun 07: Final Spotlight Presentation – Team 1: Report | Video | Code – Team 2: Report | Video | Code – Team 3: Report | Video | Code – Team 4: Report | Video | Code – Team 5: Report | Video | Code – Team 6: Report | Video | Code – Team 7: Report | Video | Code – Team 8: Report | Video | Code – Team 9: Report | Video | Code | Jun 06 @ 23:30: – Spotlight Presentation Video |

Student Team and Group Formation

This course comprises four modules: Machine Learning, Deep Networks, Regularization & Optimization, and Advanced Topics. Each module runs for four weeks and involves the following:

- 4 Main Lecture Sessions by the Lead Instructor

- 2 DeepClaw Workshop Sessions by Teaching Assistants

- 1 Project Presentation Session by the Students

- 1 Paper Review Session by the Students

For the workshop sessions, students will work in pairs, and each pair will be assigned one set of the DeepClaw toolkit.

Six students will form a team for the paper review and project presentation sessions. Register your team here.

DeepClaw Workshops

DeepClaw is a set of tools developed by the SUSTechDL group over the past few years to support teaching and research on robot learning. We believe that robotic hardware should play a central role in bridging simulation and reality for manipulation learning problems. We originally developed it as a reconfigurable workstation for vision-based robotic picking at the SIR Group at Monash University (FROBT 2018). After transitioning to SUSTech, we extended the system with a set of APIs to facilitate communication with various robotic hardware. We streamlined the mechanical design using standard aluminum extrusion systems (AIM 2020). With the growing student base of ME336 and various hardware integration constraints, we developed a new version using a set of soft robotic tongs for more efficient data collection in grasping tasks (FROBT 2022). In the latest version, we introduced further integration with tactile learning to refine the system and make it portable and low-cost (CoRL 2021, unpublished). Recently, we published a paper that fully describes its theoretical foundation and robotic applications (MatDes 2024) and submitted another paper on its applications in teaching robot learning (ICARM 2024, submitted). The DeepClaw Project won the 2023 UNESCO-ICHEI Higher Education Digitalisation Pioneer Case Award.

Students have been at the center of the iterative development of DeepClaw at SUSTechDL. Since Dr. Song and Dr. Wan developed the original version, many students have been involved in the follow-up development, and almost all students at SUSTechDL are engaged in it to some extent. Please refer to this page for key developers and milestones.

This semester, we will introduce the latest development of the DeepClaw system and use it as a teaching tool to support your learning in ME336. The Teaching Assistants will deliver three workshops covering the areas listed below. One set of the DeepClaw toolkit will be provided to every two or three students for practice.

- DeepClaw Workshops A1 & A2 (Related to Project Assignment #1)

- DeepClaw Workshops B1 & B2 (Related to Project Assignment #2)

- DeepClaw Workshops C1 & C2 (No Project Assignment)

Paper Reviews

Starting this semester, we will introduce a paper review session in which students conduct a systematic literature review on robot learning research through student presentations and in-class discussions. The general format is adapted from Dr. Zhu Yuke’s course. Each student team should expect to give at least one presentation on its assigned paper. To ensure the quality and clarity of the presentations, we expect the students to

- Read the assigned papers thoroughly and gain a good understanding before making the presentation slides. Template: [PowerPoint]

- Email the slides to the TAs and the instructor seven days before the presentation date for feedback and revision. Deadline: [Link to Submission]

  - Mar 10 @ 23:30: 1st Paper Review PowerPoint, to be presented in the Mar 19 class

  - Apr 07 @ 23:30: 2nd Paper Review PowerPoint, to be presented in the Apr 12 class

  - May 12 @ 23:30: 3rd Paper Review PowerPoint, to be presented in the May 14 class

- Recommended Papers to Review

You can choose from the list above or search for others that suit your interest and are related to the course topic. Consult with the lead instructor if you have any questions.

Failure to email the PowerPoint on time will incur a 20% deduction on the presentation score. Each presentation should be 20 min (±2 min). The presentations will be graded on the following aspects:

- Clarity of presentation (problem formulation, proposed method, key results);

- Review of prior work and the challenges addressed by this work;

- Analysis of the strengths and weaknesses of the research;

- Discussion of potential research extensions and applications;

- Response to in-class student questions.

After each presentation, we will do a 5-minute Q&A about the presentation. Three papers will be presented in each class, and then we will have a 20-minute open-ended discussion. The instructor will post the discussion questions through QQ the day before the class. The Paper Review presentation will be worth 30% of the total grade. For students who present more than once (based on availability), the final presentation grade will be the highest score of their presentations.
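The scoring rules above can be sketched as a short calculation. This is a minimal illustration, not the official grading script: it assumes the 20% deduction is applied multiplicatively to the raw presentation score, and the function names and the 0-100 scale are hypothetical.

```python
# Assumption: the "20% deduction" scales the raw score by 0.8.
LATE_SLIDES_PENALTY = 0.20


def presentation_score(raw_score, slides_on_time):
    """Return the score after applying the late-slides deduction, if any."""
    if slides_on_time:
        return raw_score
    return raw_score * (1.0 - LATE_SLIDES_PENALTY)


def final_presentation_grade(presentations):
    """Return the highest adjusted score among a student's presentations.

    `presentations` is a list of (raw_score, slides_on_time) pairs.
    """
    return max(presentation_score(raw, on_time) for raw, on_time in presentations)


# A student who presented twice: once with late slides, once on time.
grade = final_presentation_grade([(90.0, False), (80.0, True)])
print(grade)  # 80.0: the on-time 80 beats the late 90, which drops to 72
```

Under these assumptions, a strong presentation with late slides can still score lower than a weaker one submitted on time.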

Useful Resources

- Tips for Giving Clear Talks, Kayvon Fatahalian

- Three Tips for Giving a Great Research Talk, Lewis, Gruber, Van Bavel, Somerville

- CoRL 2019 talk videos (day 1, day 2, day 3) and RSS 2019 talk videos

- Reviewing a CS Conference Paper, Stephen Mann

- How to Write Good Reviews for CVPR, CVPR 2019 Program Chairs

- Example reviews from the ICLR and NeurIPS conferences.

Assignment Projects

Notes on Assignment Projects

- The assignment projects aim to provide a reproducible, hands-on experience that helps all students achieve the intended learning outcomes. In other words, it is never our intention to make them too hard to complete. Instead, we want to guide you through the assigned projects, preferably with some interesting aspects along the way.

- It is recommended that you complete the assigned projects within the class. If necessary, you can extend the submission to the next Sunday @ 23:30.

Assignment Project 1

- Your assignment is to use the raw dataset provided here (Wu Tianyu) to build a simple model for estimating the force and torque of the soft finger network with the highest accuracy.

- More details are here.

- The input of your model should be the raw dataset, and your model’s output should be the three forces and three torques estimated.

- The main evaluation metric is prediction accuracy (%), which should be as high as reasonably possible at the lowest cost.

- You can use any model or programming language but must write the whole model independently.
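To make the input/output contract of Assignment Project 1 concrete, here is a minimal baseline sketch, not the expected solution (you must still write your own model). It fits a ridge-regularized linear map from a feature vector to six outputs (three forces and three torques). The data is synthetic, and the feature dimension, function names, and the use of R² as the score are assumptions for illustration; the real dataset format and metric are defined in the assignment details.

```python
import numpy as np


def fit_linear_wrench_model(features, wrench, ridge=1e-3):
    """Fit a ridge-regularized linear map from features to six outputs."""
    # Append a constant column so the model can learn an offset.
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    # Closed-form ridge solution: W = (X^T X + ridge * I)^-1 X^T Y.
    # For simplicity, the offset term is regularized as well.
    A = X.T @ X + ridge * np.eye(X.shape[1])
    return np.linalg.solve(A, X.T @ wrench)


def predict_wrench(weights, features):
    """Predict [Fx, Fy, Fz, Tx, Ty, Tz] for each row of features."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    return X @ weights


def r2_per_output(y_true, y_pred):
    """Coefficient of determination, computed separately per output column."""
    ss_res = np.sum((y_true - y_pred) ** 2, axis=0)
    ss_tot = np.sum((y_true - y_true.mean(axis=0)) ** 2, axis=0)
    return 1.0 - ss_res / ss_tot


# Synthetic stand-in for the real dataset (hypothetical sizes).
rng = np.random.default_rng(0)
n_samples, n_features = 500, 12
features = rng.normal(size=(n_samples, n_features))
true_map = rng.normal(size=(n_features, 6))
wrench = features @ true_map + 0.01 * rng.normal(size=(n_samples, 6))

# Hold out the last 100 samples for evaluation.
train, test = slice(0, 400), slice(400, None)
weights = fit_linear_wrench_model(features[train], wrench[train])
pred = predict_wrench(weights, features[test])
scores = r2_per_output(wrench[test], pred)
print(scores.round(4))
```

A linear baseline like this mainly serves as a reference point: a more expressive model is only worth its extra cost if it clearly beats the baseline on held-out data.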

Assignment Project 2

- Your assignment is to use the DeepClaw toolkit to collect action data (motion + force/torque) for a specific manipulation task, visualize and analyze the collected data, and train a model to learn the action with the highest performance.

- More details are here.

- The input of your model should be the collected raw dataset, and your model’s output should be the action (motion + force/torque) learned.

- The evaluation metric focuses on the learned action, including motion and force/torque, in representing and recreating the action in performing the task.

- For example, if your chosen task is to trace the trajectory of an English character, the model you trained on the collected data should be capable of reproducing a new path for that character with a high success rate (%).

- We will mainly limit the task to the following scope: manually holding the tongs with a soft object between the fingers, then moving the tongs in space while squeezing them for different purposes.

- Move the tongs in 3D space: you can choose to move in any way in space as long as it is something your team has decided with a determined geometry. A few optional choices include an English letter, a Chinese character, a logo of reasonable geometry, etc.

- Assign meanings while squeezing the tongs: one way to integrate the force/torque sensing into the recorded motion data is to add extra expressions to the trajectory recorded. For example, when squeezed, the estimated changes in force and torque could represent the changing intensity, color range, trajectory size, or even on/off commands.
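One way to "assign meanings while squeezing" is sketched below: the force magnitude at each recorded sample is mapped to a normalized intensity and an on/off flag that annotate the trajectory. This is only an illustration under stated assumptions; the force range, the threshold, the array layout, and the function names are hypothetical and are not part of the DeepClaw API.

```python
import numpy as np


def force_to_intensity(force, f_min, f_max):
    """Linearly map force magnitude to [0, 1], clipping values outside the range."""
    return np.clip((force - f_min) / (f_max - f_min), 0.0, 1.0)


def annotate_trajectory(positions, force, f_min=1.0, f_max=5.0, on_threshold=0.5):
    """Attach a squeeze-driven intensity and on/off flag to each trajectory point.

    positions: (N, 3) array of x, y, z samples.
    force:     (N,) array of squeeze force magnitudes (hypothetical units).
    Returns an (N, 4) array [x, y, z, intensity] and an (N,) boolean array.
    """
    intensity = force_to_intensity(force, f_min, f_max)
    pen_down = intensity >= on_threshold
    return np.column_stack([positions, intensity]), pen_down


# Four recorded samples with increasing squeeze force.
positions = np.array([
    [0.0, 0.0, 0.0],
    [0.1, 0.0, 0.0],
    [0.2, 0.1, 0.0],
    [0.3, 0.1, 0.0],
])
force = np.array([0.5, 2.0, 3.0, 6.0])

annotated, pen_down = annotate_trajectory(positions, force)
print(annotated[:, 3])  # intensities: 0.0, 0.25, 0.5, 1.0
print(pen_down)         # False, False, True, True
```

The same pattern extends to other meanings, such as color range or trajectory size, by replacing the threshold with a different mapping of the intensity value.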

Course Project

For the final project, you will work as a group of 4 students to propose a feasible project idea using the tools and resources available by applying AI-based techniques to practical robot perception and decision-making problems. It consists of four components and is worth 40% of your total grade.

- Project Proposal Presentation (5%)

- Project Milestone Presentations (5%)

- Final Report and Codes (25%)

- Spotlight Presentation Video (5%)

Useful Resources

- Writing in the Sciences, Kristin Sainani (Coursera, YouTube)

- How to Write a Good CVPR Submission, Bill Freeman

- Novelist Cormac McCarthy’s Tips on How to Write a Great Science Paper, Van Savage, Pamela Yeh

A successful project topic should involve at least one, ideally both, of two critical components: a perception component, i.e., processing raw sensory data, and a decision-making component, i.e., controlling robot actions. You can choose to use DeepClaw or RoboSuite based on your needs. For example,

- Learning vision-based robot manipulation with deep reinforcement learning methods;

- Self-supervised representation learning of visual and tactile data;

- Model-based object pose estimation for 6-DoF grasping from RGB-D images.

Potential projects can have the following flavors:

- Improve an existing approach. You can select a paper you are interested in, reimplement it, and improve it with what you learned in the course.

- Apply an algorithm to a new problem. You will need to understand the strengths and weaknesses of an existing algorithm from research work, reimplement it, and apply it to a new problem.

- Stress test existing approaches. This kind of project thoroughly compares several existing approaches to a robot learning problem.

- Design your own approach. You develop an entirely new approach to a specific problem in these projects. Even the problem may be something that has not been considered before.

- Mix and Match approach. For these projects, you typically combine approaches that have been developed separately to address a larger and more complex problem.

- Join a research project. You can join an existing Robot Learning project with UT faculty and researchers. You are expected to articulate your own contributions in your project reports (more detail below).

You work as a team on the project, and grades will be calibrated by individual contribution. Your project may be related to research in another class project as long as consent is granted by instructors of both classes; however, you must clearly indicate in the project proposal, milestone, and final reports the exact portion of the project that is being counted for this course. In this case, you must prepare separate reports for each course and submit your final report for the other course as well.

Project Inspirations and Resources

To inspire ideas, you might also look at recent robotics publications from top-tier conferences and other resources below.

- RSS: Robotics: Science and Systems

- ICRA: IEEE International Conference on Robotics and Automation

- IROS: IEEE/RSJ International Conference on Intelligent Robots and Systems

- CoRL: Conference on Robot Learning

- ICLR: International Conference on Learning Representations

- NeurIPS: Neural Information Processing Systems

- ICML: International Conference on Machine Learning

- Publications from the UT Robot Perception and Learning Lab

You may also look at popular simulated environments and robotics datasets listed below.

Simulated Environments

- robosuite: MuJoCo-based toolkit and benchmark of learning algorithms for robot manipulation

- RoboVat: Tabletop manipulation environments in Bullet Physics

- OpenAI Gym: MuJoCo-based environments for continuous control and robotics

- AI2-THOR: open-source interactive environments for embodied AI

- RLBench: robot learning benchmark and learning environment built around V-REP

- CARLA: self-driving car simulator in Unreal Engine 4

- AirSim: a simulator for autonomous vehicles built on Unreal Engine / Unity

- Interactive Gibson: an interactive environment for learning robot manipulation and navigation

- AI Habitat: simulation platform for research in embodied artificial intelligence

Robotics Datasets

- Dex-Net: 3D synthetic object model dataset for object grasping

- RoboTurk: crowdsourced human demonstrations in simulation and real world

- RoboNet: video dataset for large-scale multi-robot learning

- YCB-Video: RGB-D video dataset for model-based 6D pose estimation and tracking

- nuScenes: large-scale multimodal dataset for autonomous driving

Project Proposal

The project proposal should be one paragraph (300-400 words). Your project proposal should describe the following:

- (20%) What is the problem that you will be investigating? Why is it interesting?

- (20%) What reading will you examine to provide context and background?

- (20%) What data will you use? If you are collecting new data, how will you do it?

- (20%) What method or algorithm are you proposing? If there are existing implementations, will you use them, and how? How do you plan to improve or modify such implementations? You don’t have to have an exact answer at this point, but you should have a general sense of how you will approach the problem you are working on.

- (20%) How will you evaluate your results? Qualitatively, what kind of results do you expect (e.g., plots or figures)? Quantitatively, what kind of analysis will you use to evaluate and/or compare your results (e.g., what performance metrics or statistical tests)?

Submission: [Deadline: Mar 24 @ 23:30] | [Proposal Template] | [Link for Submission] | Only one person on your team should submit.

Project Milestone

Your project milestone report should be 2-3 pages long and use the RSS template in LaTeX. The following is a suggested structure for your report:

- Title, Author(s)

- Introduction: Introduce your problem and the overall plan for approaching your problem

- Problem Statement: Describe your problem precisely, specifying the dataset to be used, expected results, and evaluation

- Literature Review: Describe important related work and their relevance to your project

- Technical Approach: Describe the methods you intend to apply to solve the given problem

- Intermediate/Preliminary Results: State and evaluate your results up to the milestone

Submission: [Deadline: Apr 14 @ 23:30] | [Milestone Template] | [Link for Submission] | Only one person on your team should submit.

Final Report

Your final write-up must be 6-8 pages long (8 pages max) and use the RSS template, structured like a paper from a robotics conference. Please use this template so we can fairly judge all student projects without worrying about altered font sizes, margins, etc. After the class, we will post all the final reports online so you can read about each other’s work. If you do not want your write-up to be posted online, please let us know when submitting it. The following is a suggested structure for your report and the rubric we will follow when evaluating reports. You don’t necessarily have to organize your report using these sections in this order, but that would likely be a good starting point for most projects.

- Title, Author(s)

- Abstract: Briefly describe your problem, approach, and key results. Should be no more than 300 words.

- Introduction (10%): Describe the problem you are working on, why it’s important, and an overview of your results

- Related Work (10%): Discuss published work on your project. How is your approach similar or different from others?

- Data (10%): Describe the data or simulation environment you are working with for your project. What type is it? Where did it come from? How much data are you working with? How many simulation runs did you work with? Did you have to do any preprocessing, filtering, or other special treatment to use this data in your project?

- Methods (30%): Discuss your approach to solving the problems you set up in the introduction. Why is your approach the right thing to do? Did you consider alternative approaches? You should demonstrate that you have applied ideas and skills built up during the semester to tackling your problem of choice. It may be helpful to include figures, diagrams, or tables to describe your method or compare it with other methods.

- Experiments (30%): Discuss the experiments you performed to demonstrate that your approach solves the problem. The exact experiments will vary depending on the project. Still, you might compare with previously published methods, perform an ablation study to determine the impact of various components of your system, experiment with different hyperparameters or architectural choices, use visualization techniques to gain insight into how your model works, discuss common failure modes of your model, etc. You should include graphs, tables, or other figures to illustrate your experimental results.

- Conclusion (5%): Summarize your key results – what have you learned? Suggest ideas for future extensions or new applications of your ideas.

- Writing / Formatting (5%): Is your paper clearly written and nicely formatted?

- Supplementary Material is not counted toward your 6-8 page limit and is submitted as a separate file. Your supplementary material might include the following:

  - Source code (if your project proposed an algorithm or code that is relevant and important for your project).

  - Cool videos, interactive visualizations, demos, etc.

- Examples of things not to put in your supplementary material:

  - The entire PyTorch/TensorFlow GitHub source code.

  - Any code that is larger than 10 MB.

  - Model checkpoints.

  - A computer virus.

Submission: [Deadline: Jun 02 @ 23:30] | [Final Report Template] | [Link for Submission] | Only one person on your team should submit.

- Your report PDF should list all authors who have contributed enough to your work to warrant a co-authorship position. This includes people not enrolled in ME336, such as faculty/advisors if they sponsored your work with funding or data, and significant mentors (e.g., Ph.D. students or postdocs who coded with you, collected data with you, or helped draft your model on a whiteboard). All authors should be listed directly underneath the title on your PDF. Include a footnote on the first page indicating which authors are not enrolled in ME336. All co-authors should have their institutional/organizational affiliation specified below the title. If you have non-ME336 contributors, you will be asked to describe the following:

  - Specify the involvement of non-ME336 contributors (discussion, writing code, writing paper, etc.). For an example, please see the author contributions section of the AlphaGo paper (Nature 2016).

  - Specify whether the project has been submitted to a peer-reviewed conference or journal. Include the full name and acronym of the conference (if applicable). For example, Neural Information Processing Systems (NeurIPS). This only applies if you have already submitted your paper/manuscript and it is under review as of the report deadline.

- Any code used as a base for projects must be referenced and cited in the body of the paper. This includes assignment code, finetuning example code, open-source, or GitHub implementations. You can use a footnote or full reference/bibliography entry.

- If you are using this project for multiple classes, submit the other class PDF as well. Remember, using the same final report PDF for multiple classes is not allowed.

- In summary, include all contributing authors in your PDF; include detailed non-ME336 co-author information; tell us if you submitted to a conference, cite any code you used, and submit your dual-project report.

Spotlight Talk

You will be able to present your awesome work to the instructor and other students in the last week of class. This resembles the spotlight talks at large AI conferences, such as CVPR, NeurIPS, RSS, and CoRL. See an example spotlight video in RSS 2018. Each team is required to submit an MP4 video of the slides with a resolution of 1280×720 preferred. This will enable us to load all talks onto the same laptop without configuration or format issues while allowing presenters to use whatever graphics or video tools they choose to generate the presentation. Presentations should be limited to 6 minutes and 55 seconds, with the next speaker’s video starting automatically at the 7-minute mark. If your video is longer than 6:55, it will be truncated. Please see the CVPR Presenter Instructions page for more details about converting presentation slides to videos. We will send out information about uploading the presentation slides and videos as the deadline nears. For each project team, the spotlight talk can be presented by one member or multiple. The spotlight is worth 5% of the total grade and will be graded with the same criteria as the in-class paper presentations.

Submission: [Deadline: Jun 06 @ 23:30] | [Link for Submission] | Only one person on your team should submit.